I borrowed the reading pointers below from my Google+ microblogs “Data Visualization” (https://plus.google.com/111053008130113715119/posts, 7090+ followers) and “Data Visualization with Tableau” (https://plus.google.com/112388869729541404591/posts, 1010+ followers).

1. Is the Avoidance of 3-D Bar Graphs a Knee-Jerk Reaction?

2. A modern view of Minard’s Map:

https://qonnections2016-charts.qlikcloud.com/57279cd9c22f280100294eaa/chart.html

3. Averages Aren’t What They Used to Be and Never Were

4. Six questions with John Burn-Murdoch:

http://www.visualisingdata.com/2015/09/six-questions-with-john-burn-murdoch/

5. Visart’s Demos: http://www.visart.io/demos/

6. Can We Trust Salesforce for Business in the Cloud?

https://marksmith.ventanaresearch.com/2016/05/14/can-we-trust-salesforce-for-business-in-the-cloud/

7. Pump Up Your Bump with Ranked Bars

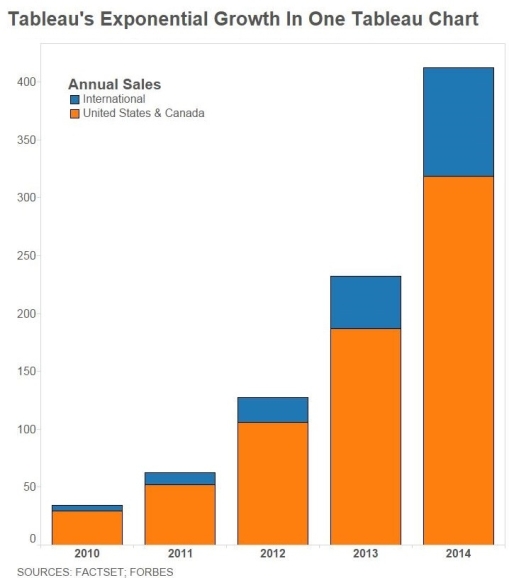

http://www.datarevelations.com/pumpyourbump.html

8. Dashboard Improvement Opportunities – Surface Observations

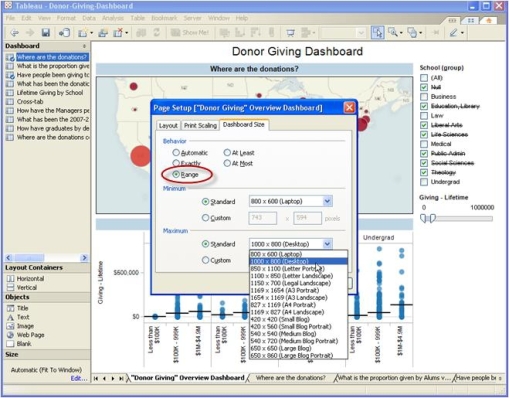

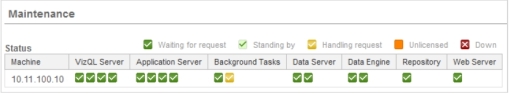

9. New in Tableau 9.3:

http://www.theinformationlab.co.uk/2016/03/24/11-new-features-to-look-forward-to-in-tableau-9-3/

also: http://www.thedataschool.co.uk/nai-louza/tableau-9-3-easier-mapbox-customization/

and http://databoss.starschema.net/version-control-revision-history-tableau-9-3/

10. Persuasion?

http://global.qlik.com/us/blog/posts/donald-farmer/direct-and-indirect-persuasion

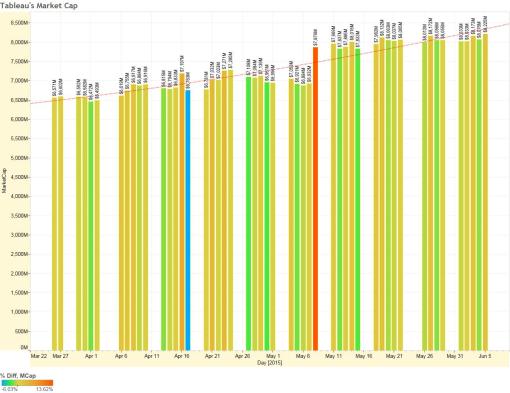

11. How Tableau Built a $3 Billion Data Empire On Top Of Beautiful Charts:

12. Tableau plus HyPer: “Something up their sleeve”

http://www.datadoodle.com/2016/03/22/tableau-plus-hyper-something-sleeve/

also http://bi-review.blogspot.com/2016/03/thoughts-on-tableau-acquiring-hyper.html

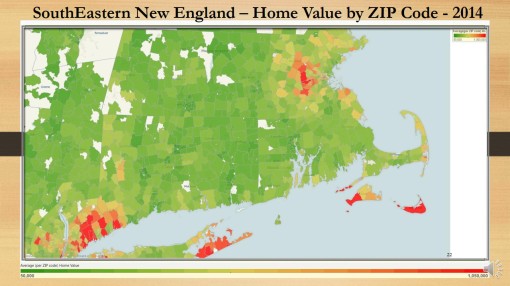

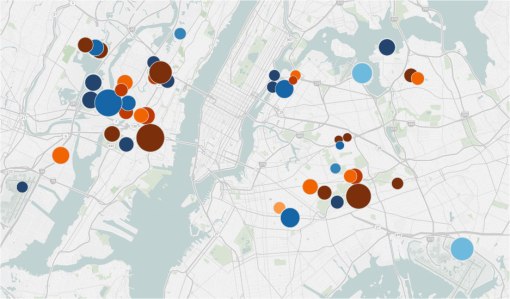

13. DV and mapping:

http://global.qlik.com/us/blog/posts/patrik-lundblad/data-visualization-foundations-mapping

14. DV Digest for March 2016:

http://www.visualisingdata.com/2016/05/best-visualisation-web-march-2016/

15. Farewell to Marvin Minsky: http://blog.stephenwolfram.com/2016/01/farewell-marvin-minsky-19272016/

16. Wolfram about himself:

http://blog.stephenwolfram.com/2016/04/my-life-in-technology-as-told-at-the-computer-history-museum/

17. David Raab about SAS:

http://customerexperiencematrix.blogspot.com/2016/04/sas-by-sip-sas-viya-offers-open-apis-to.html

18. Dimensionality Reduction:

https://www.knime.org/blog/seven-techniques-for-data-dimensionality-reduction

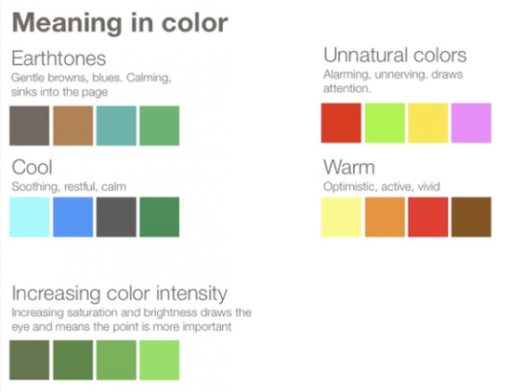

19. More about color: http://redheadedstepdata.io/color-innovation/

20. New in Spotfire 7.5:

also https://www.linkedin.com/pulse/new-tibco-ghislain-c%C3%B4t%C3%A9

and http://www.tibco.com/company/news/releases/2016/tibco-announces-key-analytics-advances-in-2016

21. 37 Qlik blogs: http://www.askqv.com/blogs/

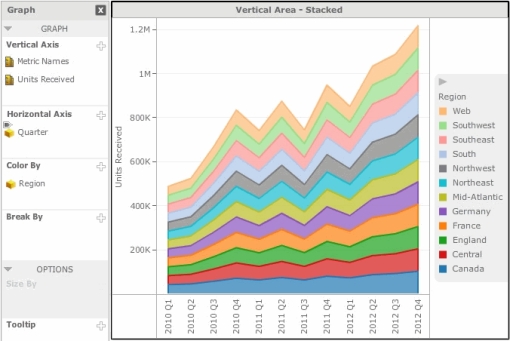

22. Text Tables:

23. 167 years ago: https://www.propublica.org/nerds/item/infographics-in-the-time-of-cholera

24. Qlik Sense 3.0:

25. Pareto: http://vizwiz.blogspot.com/2016/05/the-data-school-gym-timeline-pareto.html

26. Tableau 10: https://www.tableau.com/about/blog/2016/5/tableau-10-unification-54263

also:

https://www.interworks.com/blog/kfontenot/2016/05/02/tableau-10-beta-first-impressions

and cross-DB filtering:

and cross-DB joins: http://mkt.tableau.com/video/10.0_cross_database_join_-_wildcard_union.mp4

27. Qonnections 2016:

28. Tableau tips: https://www.tableau.com/about/blog/2016/5/5-tips-effective-visual-data-communication-54174

also:

https://www.tableau.com/about/blog/2016/5/culture-innovation-starts-self-reliant-team-members-54110

29. Directional Lollipops: http://vizwiz.blogspot.com/2016/05/tableau-tip-tuesday-how-to-create.html

30. Oracle DV Desktop:

http://www.oracle.com/technetwork/middleware/bi-foundation/dvdarena-2997628.jpg

also http://www.siebelhub.com/main/2016/05/oracle-data-visualization-desktop.html

and

http://beyond-just-data.blogspot.com/2016/04/oracle-data-visualization-desktop-dvd.html

and

31. Tile Maps:

http://sirvizalot.blogspot.com/2016/05/how-to-small-multiple-tile-map-in.html?m=1

32. Trillion Rows:

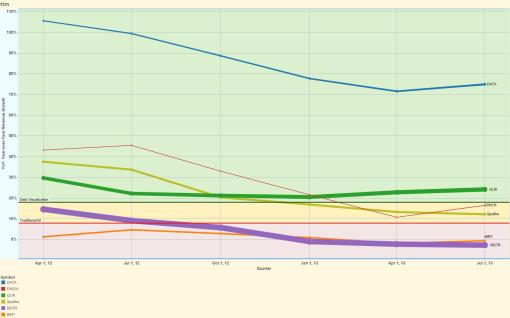

33. Power BI is trying hard:

https://www.linkedin.com/pulse/5-reasons-why-power-bi-taking-over-tableau-best-tool-tacoronte

34. Advizor Solutions Overview (http://www.advizorsolutions.com/software/products/):

6.0. October 2010. Around 10/10/10 the