Cluster analysis stayed inside academic circles for a long time, but the recent “big data” wave made it relevant to BI, Data Visualization and Data Mining users, because big data sets are in many cases just an artificial union of big data subsets that are almost unrelated to each other.

Cluster analysis can usually be defined as a method for finding groups of objects such that the objects in a group are similar (or related) to one another and different from (or unrelated to) the objects in other groups. The main reason to use such a method is to …reduce the size of large data sets! Some people confuse clustering with classification, segmentation, partitioning or the results of queries; that is a mistake.

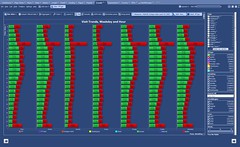

Clustering can be ambiguous, as in the picture below, and the result depends on the type of clustering (e.g. partitional, separated, center-based, contiguous, density-based, hierarchical) and on the algorithm (e.g. K-Means).

The most popular approach is partitional K-Means clustering, where each cluster is associated with a centroid (center point), each point is assigned to the cluster with the closest centroid, and the number of clusters (which is K!) must be specified in advance. The basic algorithm is very simple:

- Select K points as the initial centroids

- REPEAT

  - Form K clusters by assigning all points to the closest centroid

  - Recompute the centroid for each cluster

- UNTIL the centroids don't change, or all changes are below a predefined threshold
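The steps above can be sketched in a few lines of plain Python. This is only a minimal illustration, not a production implementation: the function names (`kmeans`, `closest`, `mean`), the parameters `tol`, `max_iter` and `seed`, the use of Euclidean distance and the six sample points are all assumptions made for the example.

```python
import math
import random


def closest(point, centroids):
    """Index of the centroid nearest to `point` (Euclidean distance)."""
    return min(range(len(centroids)), key=lambda i: math.dist(point, centroids[i]))


def mean(points):
    """Coordinate-wise mean of a non-empty list of points."""
    return tuple(sum(c) / len(points) for c in zip(*points))


def kmeans(points, k, tol=1e-9, max_iter=100, seed=0):
    rng = random.Random(seed)
    # Select K points as the initial centroids.
    centroids = rng.sample(points, k)
    for _ in range(max_iter):
        # Form K clusters by assigning every point to its closest centroid.
        labels = [closest(p, centroids) for p in points]
        # Recompute the centroid of each cluster
        # (an empty cluster simply keeps its previous centroid).
        new_centroids = []
        for i in range(k):
            members = [p for p, lab in zip(points, labels) if lab == i]
            new_centroids.append(mean(members) if members else centroids[i])
        # Stop once no centroid moved by more than the threshold.
        shift = max(math.dist(a, b) for a, b in zip(centroids, new_centroids))
        centroids = new_centroids
        if shift <= tol:
            break
    return centroids, labels


# Hypothetical sample data: two well-separated groups of three points.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]
centroids, labels = kmeans(points, k=2)
print(sorted(centroids))
print(labels)
```

On this tiny data set the first three points end up in one cluster and the last three in the other, whichever two points are drawn as the starting centroids. Real data is rarely that forgiving.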

The image below demonstrates the importance of choosing the initial centroids and shows the 6 iterations that lead to a successful K-Means clustering.
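The effect of the starting centroids can also be reproduced numerically. The sketch below is an assumed toy case, not data from the picture: four points at the corners of a wide rectangle, K = 2, and two different choices of initial centroids. The helper names `run_kmeans` and `sse` are invented for this example, and the quality of each result is scored with the sum of squared distances from each point to its centroid.

```python
import math


def run_kmeans(points, centroids, max_iter=100):
    """K-Means started from explicitly given centroids; returns (centroids, labels)."""
    for _ in range(max_iter):
        # Assign each point to its closest centroid.
        labels = [min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
                  for p in points]
        # Recompute each centroid as the mean of its members.
        updated = []
        for i, old in enumerate(centroids):
            members = [p for p, lab in zip(points, labels) if lab == i]
            updated.append(tuple(sum(c) / len(members) for c in zip(*members))
                           if members else old)
        # Stop when no centroid changed.
        if updated == list(centroids):
            break
        centroids = updated
    return centroids, labels


def sse(points, centroids, labels):
    """Sum of squared distances from every point to its assigned centroid."""
    return sum(math.dist(p, centroids[lab]) ** 2 for p, lab in zip(points, labels))


# Hypothetical data: corners of a 4 x 1 rectangle.
points = [(0.0, 0.0), (0.0, 1.0), (4.0, 0.0), (4.0, 1.0)]

# Start A: one centroid on each short side of the rectangle.
good_centroids, good_labels = run_kmeans(points, [(0.0, 0.0), (4.0, 0.0)])
good_sse = sse(points, good_centroids, good_labels)

# Start B: both centroids on the same short side.
bad_centroids, bad_labels = run_kmeans(points, [(0.0, 0.0), (0.0, 1.0)])
bad_sse = sse(points, bad_centroids, bad_labels)

print(good_labels, good_sse)
print(bad_labels, bad_sse)
```

Start A splits the rectangle into its left and right halves and finishes with an SSE of 1.0. Start B converges just as quickly, but to a top/bottom split with an SSE of 16.0, and the algorithm has no way to escape from it. A common remedy is to run K-Means several times from different starting points and keep the result with the lowest SSE.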

The K-Means algorithm is sensitive to differing cluster sizes, differing densities of datapoints, non-globular cluster shapes and, of course, outliers, but in combination with proper Data Visualization those problems can be solved in most cases.
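The outlier problem is easy to see with a few numbers. In the sketch below (made-up points, with a hypothetical helper `centroid`), a single far-away point is added to a tight cluster and the centroid is recomputed as the plain mean, exactly as K-Means does.

```python
import math


def centroid(points):
    """Coordinate-wise mean of a non-empty list of points."""
    return tuple(sum(c) / len(points) for c in zip(*points))


# Hypothetical tight cluster plus one outlier.
cluster = [(1.0, 1.0), (2.0, 1.0), (1.0, 2.0), (2.0, 2.0)]
outlier = (50.0, 50.0)

clean = centroid(cluster)
pulled = centroid(cluster + [outlier])

# Distance from the pulled centroid to the nearest genuine cluster member.
gap = min(math.dist(pulled, p) for p in cluster)

print(clean)
print(pulled)
print(gap)
```

Without the outlier the centroid sits in the middle of the four points. With it, the centroid moves to roughly (11.2, 11.2), far away from every genuine member of the cluster, which is exactly the kind of distortion that a scatter plot makes visible at a glance.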

Basically, clustering optimizes the cohesion within each cluster while maximizing the separation between the cluster and the datapoints outside of it.
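One common way to put numbers on these two ideas (an assumption here, since other measures exist) is the within-cluster sum of squares for cohesion and the between-cluster sum of squares for separation. The sketch below computes both for two hand-made clusters. The names `wss`, `bss` and `tss` and the sample points are illustrative only.

```python
import math


def centroid(points):
    """Coordinate-wise mean of a non-empty list of points."""
    return tuple(sum(c) / len(points) for c in zip(*points))


# Hypothetical clustering result: two clusters of three points each.
clusters = [
    [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5)],
    [(8.0, 8.0), (9.0, 8.5), (8.5, 9.0)],
]
all_points = [p for c in clusters for p in c]
overall = centroid(all_points)

# Cohesion: squared distance of every point to its own cluster centroid
# (smaller means tighter clusters).
wss = sum(math.dist(p, centroid(c)) ** 2 for c in clusters for p in c)

# Separation: size-weighted squared distance of each cluster centroid
# to the overall centroid (larger means clusters are further apart).
bss = sum(len(c) * math.dist(centroid(c), overall) ** 2 for c in clusters)

# Total sum of squares around the overall centroid.
tss = sum(math.dist(p, overall) ** 2 for p in all_points)

print(wss, bss, tss)
```

For a fixed data set the total `tss` does not depend on the clustering and equals `wss + bss`, so with these particular measures making clusters more cohesive and making them more separated are two sides of the same optimization.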
